1. SVD 1

1. 키워드

- Singular Value Decomposition(특이값 분해)

2. Singular Value Decomposition (SVD)

Given a rectangular matrix \(A \in \mathbb{R}^{{m \times n}}\), its singular value decomposition is written as \(A=U \Sigma V^{T}\)

- where \(U \in \mathbb{R}^{m \times m}\), \(V \in \mathbb{R}^{n \times n}\): matrices with orthonormal columns, providing orthonormal basis of \(\operatorname{Col}A\) and \(\operatorname{Row}A\), respectively

- \(\Sigma \in \mathbb{R}^{m \times n}\): a diagonal matrix whose entries are in a decreasing order, i.e., \(\sigma_{1} \geq \sigma_{2} \geq \cdots \geq \sigma_{\min (m, n)}\).

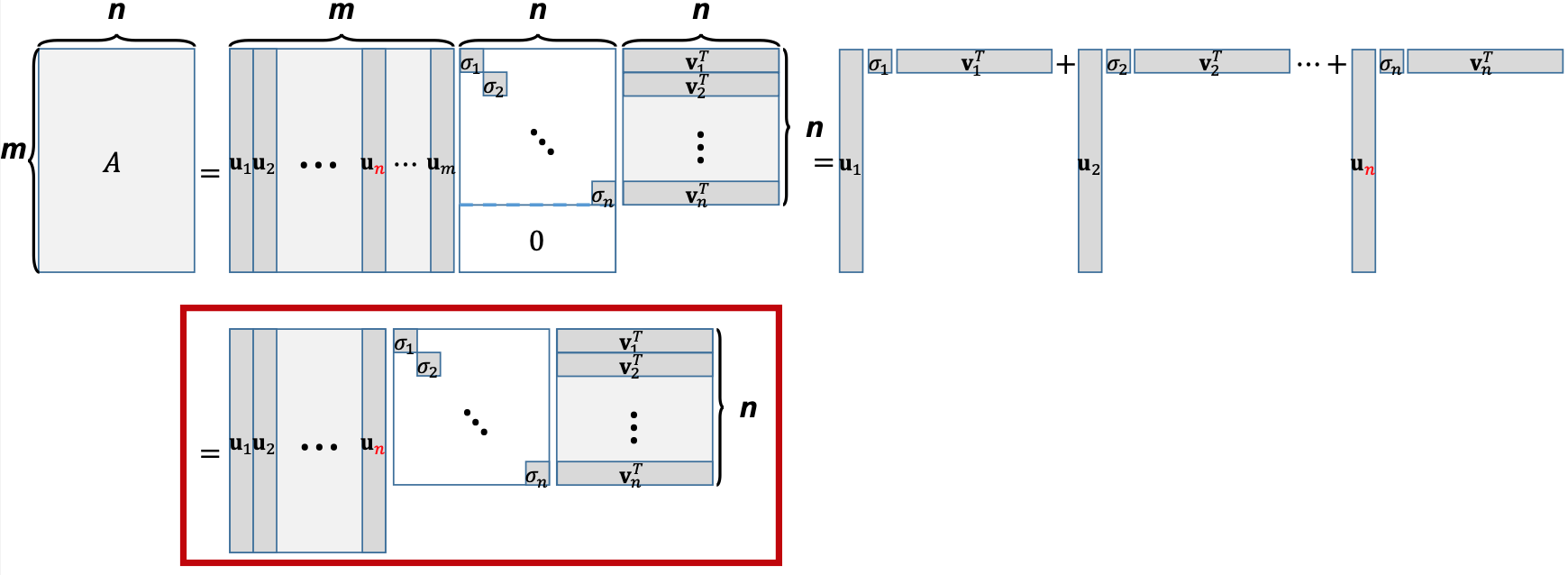

3. Basic Form of SVD

Given a matrix \(A \in \mathbb{R}^{m \times n}\) where \(m>n\), SVD gives \(A=U \Sigma V^{T}\).

4. SVD as Sum of Outer Products

\(A\) can also be represented as the sum of outer products \(A=U \Sigma V^{T}=\sum_{i=1}^{n} \sigma_{i} \mathbf{u}_{i} \mathbf{v}_{i}^{T}\), where \(\sigma_{1} \geq \sigma_{2} \geq \cdots \geq \sigma_{n}\).

5. Reduced Form of SVD

\(A\) can also be represented as the sum of outer products \(A=U \Sigma V^{T}=\sum_{i=1}^{n} \sigma_{i} \mathbf{u}_{i} \mathbf{v}_{i}^{T}\), where \(\sigma_{1} \geq \sigma_{2} \geq \cdots \geq \sigma_{n}\).

6. Another Perspective of SVD

We can easily find two orthonormal basis sets, \(\left\{\mathbf{u}_{1}, \ldots, \mathbf{u}_{n}\right\}\) for \(\operatorname{Col}A\) and \(\left\{\mathbf{v}_{1}, \ldots, \mathbf{v}_{n}\right\}\) for \(\operatorname{Row}A\), by using, say, Gram-Schmidt orthogonalization.

Are these unique orthonormal basis sets?

No. Then, can we jointly find them such that \(A \mathbf{v}_{i}=\sigma_{i} \mathbf{u}_{i}\), \(\forall i=1, \ldots, n\).

Let us denote \(U=\left[\begin{array}{llll}\mathbf{u}_{1} & \mathbf{u}_{2} & \cdots & \mathbf{u}_{n}\end{array}\right] \in \mathbb{R}^{m \times n}\), \(V=\left[\begin{array}{llll}\mathbf{v}_{1} & \mathbf{v}_{2} & \cdots & \mathbf{v}_{n}\end{array}\right] \in \mathbb{R}^{n \times n}\), and \(\Sigma=\left[\begin{array}{cccc}\sigma_{1} & 0 & \cdots & 0 \\0 & \sigma_{2} & \ddots & \vdots \\\vdots & \ddots & \ddots & 0 \\0 & \cdots & 0 & \sigma_{n}\end{array}\right] \in \mathbb{R}^{n \times n}\).

Consider \(A V=A\left[\begin{array}{llll}\mathbf{v}_{1} & \mathbf{v}_{2} & \cdots & \mathbf{v}_{n}\end{array}\right]=\left[\begin{array}{llll}A \mathbf{v}_{1} & A \mathbf{v}_{2} & \cdots & A \mathbf{v}_{n}\end{array}\right]\) and \(U \Sigma=\left[\begin{array}{llll}\mathbf{u}_{1} & \mathbf{u}_{2} & \cdots & \mathbf{u}_{n}\end{array}\right]\left[\begin{array}{cccc}\sigma_{1} & 0 & \cdots & 0 \\0 & \sigma_{2} & \ddots & \vdots \\\vdots & \ddots & \ddots & 0 \\0 & \cdots & 0 & \sigma_{n}\end{array}\right]=\left[\begin{array}{llll} \sigma_{1} \mathbf{u}_{1} & \sigma_{2} \mathbf{u}_{2} & \cdots & \sigma_{n} \mathbf{u}_{n} \end{array}\right]\)

\(A V=U \Sigma \Leftrightarrow\left[\begin{array}{llll}A \mathbf{v}_{1} & A \mathbf{v}_{2} & \cdots & A \mathbf{v}_{n}\end{array}\right]=\left[\begin{array}{llll}\sigma_{1} \mathbf{u}_{1} & \sigma_{2} \mathbf{u}_{2} & \cdots & \sigma_{n} \mathbf{u}_{n}\end{array}\right]\)

\(V^{-1}=V^{T}\) since \(V \in \mathbb{R}^{n \times n}\) has orthonormal columns.

Thus \(A V=U \Sigma \Leftrightarrow A=U \Sigma V^{T}\)